Quarkus LangChain4j

Build intelligent, AI-infused applications by integrating Large Language Models into your Quarkus services with a declarative, developer-friendly API.

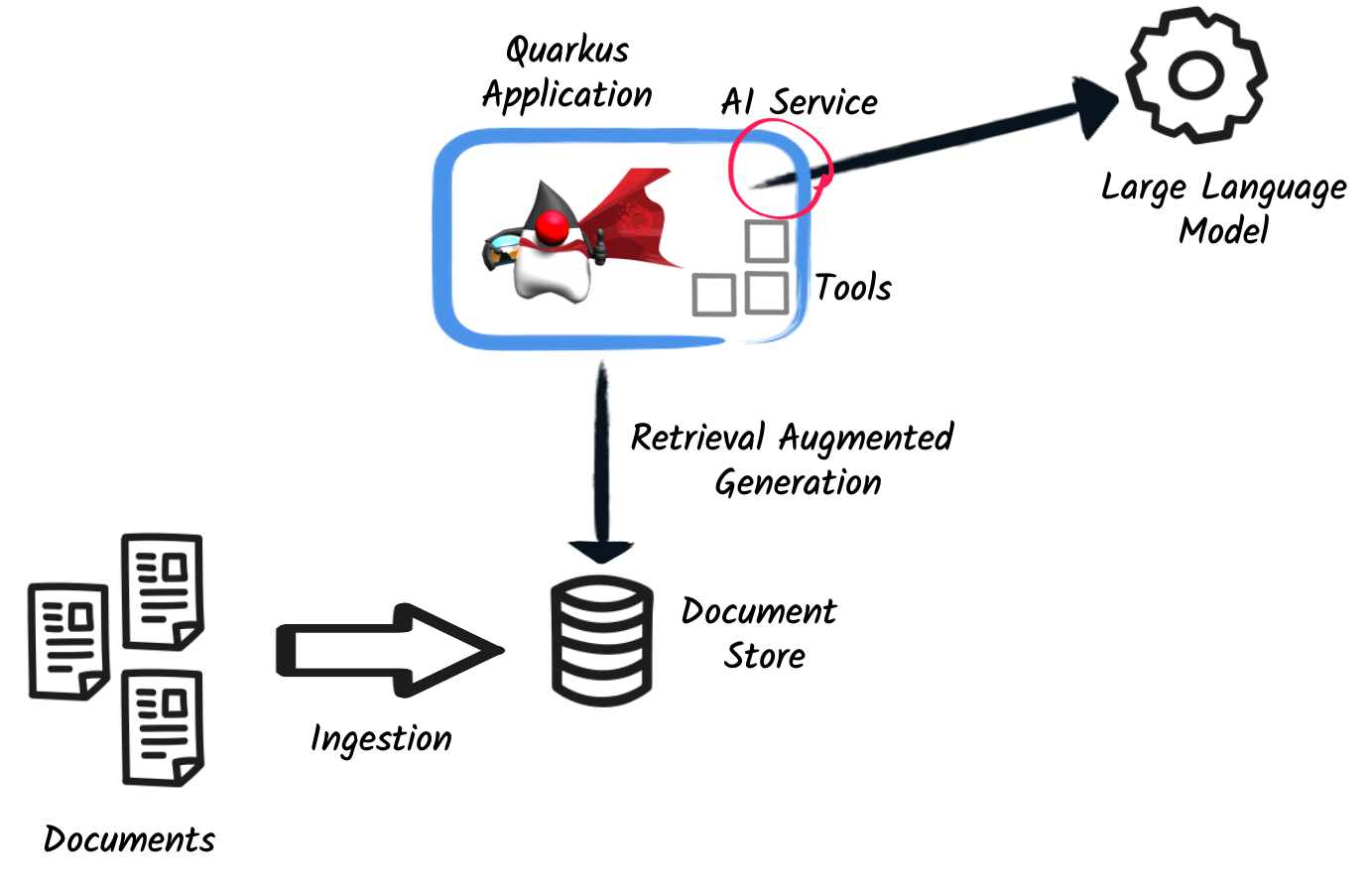

The Quarkus LangChain4j extension integrates Large Language Models (LLMs) into your Quarkus applications, enabling use cases such as summarization, classification, document extraction, intelligent chatbots, and more — all with Quarkus-native performance and developer experience.

Why Use Quarkus LangChain4j?

- ✅ Declarative AI services: Use Java interfaces and annotations to call LLMs with minimal boilerplate

- 🔋 Optimized for Quarkus: Fast startup, native-image ready, low footprint

- 🧠 Support for tools (function calling), agentic patterns, and chat memory: Easily create AI services that can invoke backend functions, maintain context, and handle complex multi-turn interactions

- 🔎 Powerful RAG support: Plug in Redis, Chroma, Infinispan, etc., and build Retrieval-Augmented Generation (RAG) applications easily. You keep control over the retrieval logic and how the retrieved data is used

- 📈 Built-in observability: Logs, metrics, and tracing

- ⌨️ Dev UI & Dev Services: A developer experience that sparks joy, even for AI-infused applications

- 🤖 Built for the future of intelligent applications: Leverage protocols such as Model Context Protocol (MCP) and Agent-to-Agent (A2A) to create advanced AI applications
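To make the idea of a declarative AI service more concrete, here is a minimal plain-JDK sketch. It does not use the extension's API: the `@Prompt` annotation, the `create` helper, and the fake model function are all hypothetical stand-ins, and a dynamic proxy is used only because it is the shortest way to illustrate the pattern on the standard library. With the extension itself, you would annotate the interface with `@RegisterAiService` and inject it through CDI instead.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.Proxy;
import java.util.function.Function;

class DeclarativeSketch {

    /** Hypothetical stand-in for a prompt-template annotation (not the extension's API). */
    @Retention(RetentionPolicy.RUNTIME)
    @Target(ElementType.METHOD)
    @interface Prompt {
        String value();
    }

    /** The declarative contract: the developer writes only this interface. */
    interface TicketTriage {
        @Prompt("Classify this support ticket as BILLING, TECHNICAL or OTHER: {0}")
        String classify(String ticket);
    }

    /** Builds an implementation that renders the template and forwards it to the model. */
    static <T> T create(Class<T> type, Function<String, String> model) {
        InvocationHandler handler = (proxy, method, args) -> {
            Prompt prompt = method.getAnnotation(Prompt.class);
            if (prompt == null) {
                throw new UnsupportedOperationException(method.getName());
            }
            // Substitute positional placeholders {0}, {1}, ... with the call arguments.
            String text = prompt.value();
            for (int i = 0; args != null && i < args.length; i++) {
                text = text.replace("{" + i + "}", String.valueOf(args[i]));
            }
            return model.apply(text);
        };
        return type.cast(Proxy.newProxyInstance(
                type.getClassLoader(), new Class<?>[] {type}, handler));
    }

    public static void main(String[] args) {
        // A fake "model" so the sketch runs without any network call or API key.
        Function<String, String> fakeModel =
                prompt -> prompt.contains("invoice") ? "BILLING" : "OTHER";
        TicketTriage triage = create(TicketTriage.class, fakeModel);
        System.out.println(triage.classify("My invoice is wrong"));
    }
}
```

The calling code only sees `TicketTriage.classify(...)`. Prompt construction and the model call stay behind the interface, which is the property the declarative approach is meant to give you.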

What Can You Build?

From chatbots to intelligent document processing, Quarkus LangChain4j empowers you to infuse AI into real-world applications, all within a familiar Java and Quarkus environment.

Use it to:

- Classify and triage documents automatically based on their content, ideal for support tickets, legal filings, or form submissions.

- Extract structured or unstructured data from free-form text, making it easy to pull key insights from reports, emails, or contracts.

- Generate content like summaries, customer responses, reports, or recommendations tailored to your business logic.

- Analyze or generate images using multi-modal models, for instance, extract text from screenshots or create illustrations from prompts.

- Build chat-based assistants that can guide users, invoke tools, or answer questions based on custom knowledge.

- Implement intelligent search using Retrieval-Augmented Generation (RAG), combining LLMs with vector-based document stores for context-aware responses.
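The retrieve-then-augment flow behind RAG can be sketched in a few lines of plain Java. This is an illustration under stated assumptions, not the extension's API: the `Segment` record, the in-memory list standing in for a vector store, and the hand-written three-dimensional vectors are all hypothetical. In a real application the vectors would come from an embedding model and the store would be a backend such as Redis or Chroma.

```java
import java.util.Comparator;
import java.util.List;

class RagSketch {

    /** A stored text segment with a toy embedding vector (values are made up). */
    record Segment(String text, double[] vector) {}

    /** Cosine similarity between two vectors of equal length. */
    static double cosine(double[] a, double[] b) {
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    /** Retrieval step: texts of the k segments most similar to the query vector. */
    static List<String> retrieve(List<Segment> store, double[] query, int k) {
        return store.stream()
                .sorted(Comparator.comparingDouble((Segment s) -> -cosine(s.vector(), query)))
                .limit(k)
                .map(Segment::text)
                .toList();
    }

    /** Augmentation step: put the retrieved context in front of the user's question. */
    static String augment(String question, List<String> context) {
        return "Answer using only this context:\n- " + String.join("\n- ", context)
                + "\nQuestion: " + question;
    }

    public static void main(String[] args) {
        List<Segment> store = List.of(
                new Segment("Refunds are processed within 14 days.", new double[] {0.9, 0.1, 0.0}),
                new Segment("Our office is closed on Sundays.", new double[] {0.0, 0.2, 0.9}),
                new Segment("Refund requests need an order number.", new double[] {0.8, 0.3, 0.1}));
        double[] queryVector = {1.0, 0.2, 0.0}; // would come from an embedding model
        // The augmented prompt is what would be sent to the LLM.
        System.out.println(augment("How long do refunds take?", retrieve(store, queryVector, 2)));
    }
}
```

Keeping retrieval and augmentation as separate steps is what lets you control both the retrieval logic and how the retrieved data is used.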

Where to Go Next?

Ready to dive deeper? Here are some good next steps depending on what you want to explore.

- Build your first AI-powered Quarkus app using OpenAI or Hugging Face in just a few minutes.

- Learn how to declare and use AI services with annotations and CDI, the core of the extension.

- Discover how to craft prompts effectively and structure conversations for better model control.

- Combine your own knowledge sources with LLMs to build powerful search and Q&A systems.

- Try the full guided workshop with code, exercises, and step-by-step labs.

Why not use LangChain4j directly?

This extension builds on top of the LangChain4j library and provides a declarative programming model tailored to Quarkus.

The LangChain4j library is a flexible toolkit. It provides a rich set of building blocks to work with Large Language Models, such as agents, tools, retrievers, and chains, giving developers full control over how to compose and orchestrate them.

However, when using LangChain4j directly, you’re responsible for wiring these pieces together, configuring the model clients, handling lifecycle concerns, and integrating observability, configuration, and memory management into your application. This approach is powerful, but low-level and manual.

The Quarkus LangChain4j extension adds an opinionated, enterprise-grade integration layer that handles much of this boilerplate for you:

- Declarative AI services with CDI and annotations

- Native support for OpenAI, Hugging Face, Ollama, and more

- Centralized configuration with Quarkus config properties

- Build-time optimization and validation

- Observability: logs, metrics, traces

- Dev Services for embedding stores and model serving

- Dev UI for visual introspection and testing

- Automatic chat memory management

This lets you focus on your application logic instead of infrastructure setup.
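As a rough illustration of centralized configuration, a model provider can be set up in `application.properties`. The fragment below is a sketch that assumes the OpenAI provider is the one in use; the model name and values are placeholders, and the property names should be checked against the provider's configuration reference for your version.

```properties
# Assumes the OpenAI provider; other providers use their own property prefix.
# The API key is read from an environment variable rather than hard-coded.
quarkus.langchain4j.openai.api-key=${OPENAI_API_KEY}
# Placeholder model name and sampling temperature.
quarkus.langchain4j.openai.chat-model.model-name=gpt-4o-mini
quarkus.langchain4j.openai.chat-model.temperature=0.2
# Log outgoing requests while developing.
quarkus.langchain4j.openai.log-requests=true
```

Because these are ordinary Quarkus config properties, they can be overridden per profile or through environment variables like any other setting.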

That said, Quarkus LangChain4j doesn’t lock you into a single programming model. You can mix and match:

- Use the high-level declarative approach (@RegisterAiService) for quick integration

- Drop down to the lower-level LangChain4j APIs when you need more control

This balance provides the best of both worlds: high productivity with the flexibility to go low-level when required.